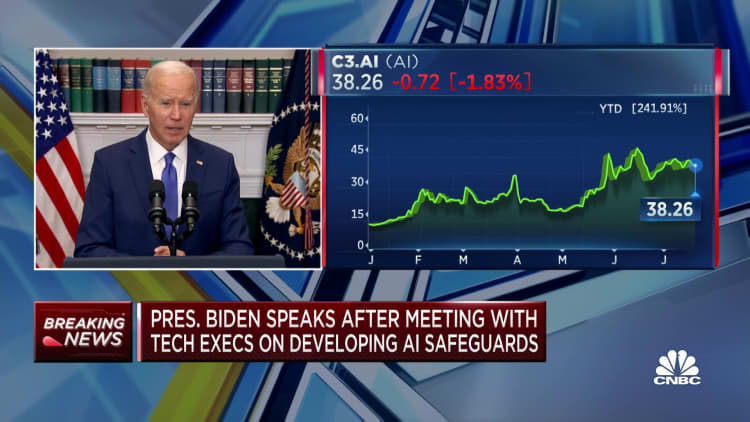

When leaders at technology giants including Amazon, Google, Meta, and Microsoft met with President Joe Biden last month, they pledged to follow artificial intelligence safeguards designed to make sure their AI tools are safe before releasing them to the public.

Among their commitments is robust testing of AI systems to guard against one of the most significant areas of risk: cybersecurity.

Chief information security officers and other cyber leaders trying to stay on top of this fast-moving technology are well aware that generative AI is making ransomware attacks and phishing schemes easier to launch and more widespread.

"Cyber vulnerabilities are becoming democratized," said Collin R. Walke, head of law firm Hall Estill's cybersecurity and data privacy practice. More and more individuals have the capabilities of hackers through tools such as ransomware-as-a-service and AI. For CISOs and other cyber leaders, the rapid adoption of generative AI "changes the threat landscape tremendously," he said.

For example, generative AI has made phishing attacks easier to produce and more authentic-looking. "It used to be that when an employee got a phishing email it was somewhat easy to tell it was a fake because there was just something off about the wording," Walke said. With generative AI, a non-English-speaking bad actor can instantly and nearly flawlessly translate an email into any language, making it harder for employees to spot the fakes.

But cyber experts say the same AI tools that enable hackers to move faster and with greater scale are also available to companies looking to fortify their cybersecurity capabilities. "Yes, attackers can automate, but so can the defenders," said Stephen Boyer, co-founder and chief technology officer of cyber risk management firm BitSight. "AI makes the bad attacker a more proficient attacker, but it also makes the OK defender a really good defender."

Boyer said AI will enable engineers writing code to automatically check for vulnerabilities, resulting in more secure code. "There are tools that do that now, but AI is going to speed it up incredibly," he said.

In fact, using AI to amplify speed and scale in cybersecurity is among the biggest benefits experts see coming in the near term.

Using the technology for tasks that are difficult and time-consuming will be a huge benefit for CISOs, said Michael McNerney, chief security officer at cyber insurance company Resilience. "Taking inventory of all your devices, all endpoints and applications is very complex, laborious, and, quite frankly, a pain. I can see a future where AI helps a CISO get the lay of the land and helps them understand their network quickly and thoroughly," he said.

He added that since so much of cybersecurity is hygiene, using AI to help streamline tasks that are well understood and highly repetitive would be of tremendous value.

Still, Walke and others caution against complacency setting in now that some of Silicon Valley's biggest companies are taking Biden's AI safeguard pledge. "We still have a lot of people in AI companies around the world that are going to continue to abuse the system, that are going to continue to develop the technology without adequate legal or ethical rules in place."

For the foreseeable future, cyber experts say a company's best defense is an "all-hands-on-deck scenario" where CISOs work with the board, the chief risk officer, and the CEO to determine how — and when — AI is deployed across the organization. "Just two months ago, OpenAI suffered a data breach hack, so if they're vulnerable, one CISO in a company isn't going to be able to handle the complications and potential risks of AI," Walke said.

In the meantime, it's important to keep AI and its potential impact in context. "We're at the peak of the hype cycle, but I also think that's natural," McNerney said. "We have a very exciting, very powerful emerging technology that few people truly understand. I think over the next year, cyber leaders are going to figure out where AI is really useful and where it's not."